Query Streams for Apache Pinot

Lightweight tool to push real-time query results from Apache Pinot to webhooks for seamless UI integration.

Working across real-time streaming technologies like Apache Kafka, Flink, and Pinot has been one of the most exciting aspects of my career. Especially in the past couple of years, it’s been incredible to see some of the interactive analytics apps built on custom UIs powered by Apache Pinot. With its purpose-built architecture, Pinot enables developers to query massive datasets at scale, build stunning visualizations, and handle jaw-dropping QPS with ease. The real-time dashboards and UI-driven analytics being built today are so damn powerful—they’ve completely redefined what’s possible when it comes to delivering actionable insights directly to users.

But in talking to developers, especially UI engineers, it became clear that while Pinot is an incredible backend for real-time analytics, there’s an opportunity to simplify how its power is harnessed by the app developers. Developers often find themselves building custom microservices to fetch, transform, and deliver data to their applications—services that can be repetitive, resource-intensive, and disconnected from Pinot’s core strengths. Query Streams addresses that gap.

Of course, this is not to say that this is a robust pattern for every use case. In some cases, it might still be necessary to build a microservice, depending on the complexity and business logic of your application. But for many teams, abstracting that glue logic improves efficiency and streamlines development (no pun intended). Query Streams is a simple tool I put together to let UI developers easily build and deploy this abstraction. There is a lot we can add to this, and any thoughts/comments/code are always welcome :)

Query Streams

Query Streams for Apache Pinot is a lightweight tool that lets UI/application developers rethink how data flows from Pinot to their applications. Instead of pulling data on demand, why not flip the script and push it? Imagine your dashboards, heat maps, or widgets powered by streams that query Pinot for you and deliver the results to webhooks in real time. It simplifies your UI while making the most of Pinot’s raw analytical power. Today, the tool supports webhooks. We can expand to support a lot more (Kafka, MQs, notifications, alerts); anything is possible. For now, have fun publishing data to a webhook, build some cool apps, and show them off!
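To make the push model concrete, here is a minimal sketch of the receiving side: a tiny Python webhook endpoint that accepts JSON POSTs and keeps them in memory. The payload shape Query Streams sends is not specified in this post, so the handler accepts any JSON body. The names `WebhookHandler`, `make_server`, `received`, and port 9090 are placeholders of mine, not part of the tool.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Any, List

# Payloads received so far, newest last (in-memory only, for illustration).
received: List[Any] = []


class WebhookHandler(BaseHTTPRequestHandler):
    """Accepts any JSON body via POST; the real payload shape is an assumption."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        try:
            payload = json.loads(body or b"null")
        except ValueError:
            # Covers malformed JSON and undecodable bytes.
            self.send_response(400)
            self.end_headers()
            return
        received.append(payload)
        self.send_response(204)  # acknowledged, no response body
        self.end_headers()

    def log_message(self, format: str, *args: Any) -> None:
        pass  # keep the example quiet


def make_server(host: str = "127.0.0.1", port: int = 9090) -> HTTPServer:
    """Build the server; call serve_forever() on the result to start it."""
    return HTTPServer((host, port), WebhookHandler)
```

Calling `make_server().serve_forever()` starts the listener, and a stream's destination `url` would then point at that host and port. A real UI backend would likely forward each payload to the browser (for example over WebSockets) instead of holding it in a list.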

Highlights / Benefits

1. Simplifies Data Delivery to the Frontend

Effortlessly query Apache Pinot in real time and push data to webhooks. By streaming query results directly to dashboards, heat maps, and widgets, developers can build lightweight UIs while leveraging Pinot’s scale to power seamless real-time analytics.

2. Reduces Redundancy with Built-In Deduplication

When query results don’t change, Query Streams is intelligent enough to skip pushing data to the UI, reducing redundant traffic and saving costs on QPS. This push-first model minimizes the burden on UI components, keeping them lean and efficient while still delivering timely updates when data changes.

3. Gives Developers Fine-Grained Control

Throttle query execution, manage deduplication settings, and monitor real-time metrics through an intuitive set of APIs. With built-in tools for operational control, developers can streamline their workflows, stay focused on building user-facing apps, and let Pinot handle the heavy lifting in the background.
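The deduplication idea in highlight 2 can be sketched in a few lines of Python. This is not the tool's actual implementation, only one plausible reading of it: fingerprint each query result, and skip the push when the fingerprint matches the last one sent within a time window. The class name `WindowedDeduper` is hypothetical, and treating the `duration` setting as a window in milliseconds is my assumption.

```python
import hashlib
import json
import time
from typing import Any, Callable, Optional


class WindowedDeduper:
    """Hypothetical sketch: suppress identical results inside a time window."""

    def __init__(self, duration_ms: int, clock: Callable[[], float] = time.monotonic):
        self.duration_s = duration_ms / 1000.0  # assumes duration is in milliseconds
        self.clock = clock
        self._last_digest: Optional[str] = None
        self._last_sent_at: Optional[float] = None

    @staticmethod
    def _digest(result: Any) -> str:
        # Canonical JSON so that key order does not change the fingerprint.
        canonical = json.dumps(result, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def should_send(self, result: Any) -> bool:
        """Return True if the result should be pushed to the destination."""
        now = self.clock()
        digest = self._digest(result)
        unchanged = digest == self._last_digest
        within_window = (
            self._last_sent_at is not None
            and now - self._last_sent_at < self.duration_s
        )
        if unchanged and within_window:
            return False  # same data, sent recently: skip the push
        self._last_digest = digest
        self._last_sent_at = now
        return True
```

In this reading, an unchanged result is sent again once the window expires, which gives the UI a periodic heartbeat; a stricter variant could suppress unchanged results indefinitely.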

What’s supported today?

Stream Creation and Management: Create, start, stop, update, and delete streams using APIs with a unique StreamID for identification.

Apache Pinot Integration: Query Apache Pinot periodically and send results to external systems like webhooks, with support for dynamic query configurations.

StarTree Free Tier Support: Supports integration with StarTree Free Tier using Bearer tokens for authentication.

Deduplication and Throttling: Skips redundant query results to reduce QPS and ensures efficient delivery to webhooks, with configurable deduplication and throttling.

Metrics Tracking: Tracks events sent, deduplicated events, and query execution counts with a combination of in-memory caching and periodic file-based persistence.

File-Based State Store: Stores stream configurations and states to ensure streams are preserved and resumed across crashes or restarts.

Basic Dashboard: A minimal dashboard displaying the list of streams and their associated metrics.
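For readers curious how a file-based state store like the one listed above can survive crashes, here is a minimal Python sketch. It is an illustration under my own assumptions, not the tool's code: the function names `save_state` and `load_state`, the single JSON file, and the write-then-rename strategy are all hypothetical.

```python
import json
import os
import tempfile
from typing import Any, Dict


def save_state(path: str, streams: Dict[str, Any]) -> None:
    """Write stream configs atomically so a crash never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(streams, f)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach the disk
        os.replace(tmp_path, path)  # atomic swap of the old file for the new one
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_state(path: str) -> Dict[str, Any]:
    """Load saved streams on startup; an absent file means there is nothing to resume."""
    try:
        with open(path, "r", encoding="utf-8") as f:
            return json.load(f)
    except FileNotFoundError:
        return {}
```

On restart, a server following this pattern would call `load_state` and resume every stream whose saved state is `running`.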

Upcoming Features

Multicast Support: Send data to multiple destinations for each stream, enabling integrations with webhooks, Kafka, and other external systems.

Kafka Support: Publish stream results to Kafka topics to expand compatibility with real-time processing systems.

Advanced UI for Stream Management: Improved user interface to simplify stream creation, monitoring, and lifecycle management.

Webhook Authentication: Add token-based or API key authentication to secure webhook integrations.

Custom Query Transformations: Enable result transformations (e.g., filtering, aggregation) before delivering data to destinations.

Error Handling and Alerts: Send alerts to developers or teams when streams encounter errors, such as failed queries or webhook delivery issues.

Inference Support: Enrich outgoing messages with AI or other APIs before they reach the UI.

Stream Tags and Metadata: Allow tagging streams with metadata for better organization and searchability.

Parameter Support: Allow query parameters to be passed dynamically from the UI, enabling greater flexibility in building interactive and personalized analytics experiences.

Getting Started

Clone the Project

git clone https://github.com/avoguru/qstreams.git
cd qstreams

Run with Docker

docker build -t qstreams .
docker run -p 8080:8080 qstreams

Deploy to a Cloud Service

Push the Docker image to your preferred registry (e.g., Docker Hub, AWS ECR, or GCP Artifact Registry), and deploy it to any Docker-supported platform like Google Cloud Run, AWS ECS, or Azure App Service.

Coming Soon

A pre-built Docker image will soon be available for direct download from Docker Hub to simplify the setup process further.

Create a Stream

Define your stream with its query, destination, and configuration. Each stream is assigned a unique stream_id upon creation.
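If you would rather create streams from code than from the command line, the same request can be assembled in Python. The sketch below mirrors the fields of this section's sample request. The helper names `build_stream_config` and `create_stream` are mine, and two things are assumptions on my part: that the Pinot `authentication` block may be omitted when the broker needs no auth, and that `query_interval` and `duration` are in milliseconds.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_stream_config(
    name: str,
    query: str,
    broker_url: str,
    webhook_url: str,
    query_interval: int = 1000,   # assumed to be milliseconds
    dedupe_enabled: bool = True,
    dedupe_duration: int = 30000,  # assumed to be milliseconds
    pinot_auth: Optional[Dict[str, str]] = None,
) -> Dict[str, Any]:
    """Assemble a request body with the same fields as the sample request."""
    config: Dict[str, Any] = {
        "name": name,
        "pinot": {
            "query": query,
            "broker_url": broker_url,
            "query_interval": query_interval,
        },
        "destination": {"type": "webhook", "url": webhook_url},
        "dedupe": {"enabled": dedupe_enabled, "duration": dedupe_duration},
    }
    if pinot_auth:
        # Assumption: authentication is optional for brokers that need none.
        config["pinot"]["authentication"] = dict(pinot_auth)
    return config


def create_stream(base_url: str, config: Dict[str, Any], timeout: float = 10.0) -> str:
    """POST the config to /streams and return the stream_id from the response."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/streams",
        data=json.dumps(config).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["stream_id"]
```

With a server running locally, `create_stream("http://localhost:8080", build_stream_config(...))` would return the new stream's ID.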

Sample request

curl -X POST http://localhost:8080/streams \
  -H "Content-Type: application/json" \
  -d '{
    "name": "stream1",
    "pinot": {
      "query": "SELECT * FROM SampleTable LIMIT 10",
      "broker_url": "https://broker.pinot.celpxu.cp.s7e.startree.cloud/query/sql",
      "query_interval": 1000,
      "authentication": {
        "Authorization": "Bearer YOUR_TOKEN",
        "database": "YOUR_DATABASE_NAME"
      }
    },
    "destination": {
      "type": "webhook",
      "url": "https://example.com/webhook",
      "authentication": {
        "API-Key": "YOUR_API_KEY"
      }
    },
    "dedupe": {
      "enabled": true,
      "duration": 30000
    }
  }'

Sample response

{
  "message": "Stream created successfully",
  "stream_id": "67e2134d-a925-49a4-ac47-11b14ce4b603"
}

List All Streams

List all the streams available on the server.

Sample request

curl -X GET http://localhost:8080/streams

Sample response

{
  "streams": [
    {
      "stream_id": "67e2134d-a925-49a4-ac47-11b14ce4b603",
      "name": "stream1",
      "query": "SELECT * FROM airlineStats LIMIT 10",
      "broker_url": "http://localhost:9000/sql",
      "destination_type": "webhook",
      "destination_config": "https://example.com/webhook",
      "interval": 1000,
      "state": "running",
      "dedupe": true,
      "dedupe_duration": 30000
    }
  ]
}

View Metrics

Monitor real-time metrics for all streams, such as the number of events sent, deduplicated, and queries executed.
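Once you have the metrics JSON, a small helper can turn the raw counters into something a dashboard can show directly. The Python sketch below assumes the response has the shape of this section's sample (a `streams` list with `events_sent`, `events_deduped`, and `number_of_queries`). The function name `summarize_metrics` is hypothetical, and reading the dedupe ratio as deduped events over sent plus deduped events is my interpretation of the counters.

```python
from typing import Any, Dict


def summarize_metrics(metrics: Dict[str, Any]) -> Dict[str, Dict[str, float]]:
    """Compute a per-stream dedupe ratio from a /metrics-style response."""
    summary: Dict[str, Dict[str, float]] = {}
    for stream in metrics.get("streams", []):
        sent = stream.get("events_sent", 0)
        deduped = stream.get("events_deduped", 0)
        handled = sent + deduped
        summary[stream["stream_id"]] = {
            "queries": stream.get("number_of_queries", 0),
            # Share of results that were skipped instead of pushed.
            "dedupe_ratio": deduped / handled if handled else 0.0,
        }
    return summary
```

A high ratio suggests the query interval is shorter than the rate at which the underlying data changes, so the interval could probably be relaxed.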

Sample request:

curl -X GET http://localhost:8080/metrics

Sample response:

{
  "streams": [
    {
      "stream_id": "67e2134d-a925-49a4-ac47-11b14ce4b603",
      "events_sent": 10,
      "events_deduped": 2,
      "number_of_queries": 12
    }
  ]
}

Manage Stream Lifecycle

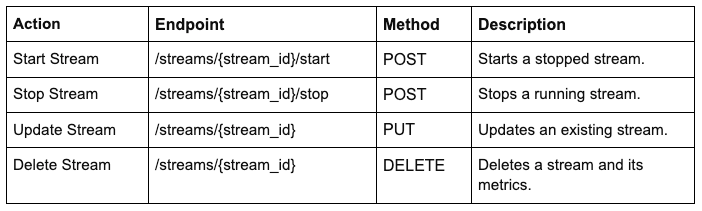

The following APIs allow you to manage the lifecycle of your streams, including starting, stopping, updating, and deleting them.

Example Usage

Sample request (Start stream):

curl -X POST http://localhost:8080/streams/{stream_id}/start

Sample response:

{ "message": "Stream started successfully", "stream_id": "{stream_id}" }

Console Access

http://localhost:8080/console/

Useful links:

Repo: https://github.com/avoguru/qstreams

Story of Apache Pinot: https://startree.ai/resources/from-grape-to-glass-apache-pinot-graduates-like-a-fine-wine